Written By: Tim Ford -- 5/12/2009

Problem

Starting with Microsoft SQL Server 2005, DBAs were able to have a greater insight into the inner workings of their supported SQL Server instances through the use of Dynamic Management Views and Functions, collectively known as DMOs (Dynamic Management Objects.)

Some of the most-beneficial information concerns index usage. This metadata is collected by the Query Optimizer Engine and cached for querying via the sys.dm_db_index_usage_stats DMV as well as the collection of Missing Index DMOs (sys.dm_db_missing_index_groups, sys.dm_db_missing_index_group_stats, sys.dm_db_missing_index_columns, and sys.dm_db_missing_index_details.) There is a very critical shortcoming in the underlying process however; the information, since it is cached and not persisted in a physical table, is permanently lost once a SQL Server service restart occurs. In this three-part series I'll look at what you can do to persist this data so you don't find yourself waiting for usage stats to compile over a reasonable period of time just so you can harness the power of the indexing DMOs in your performance tuning process.

When determining how to persist the data I originally thought the process would be extremely straight-forward. On the surface one would think that you would simply a build code construct that would drop the persisted table if it exists, then do a SELECT...INTO from the DMV into a new physical table. Well, that would be great if you didn't care about losing all your persisted data after a service restart, but that would be exactly what would happen if this script was run after a service restart. Therefore the code is slightly more complex than that. This being Part One, we will focus on setting up the structure of that code, the necessary underlying objects, and the suggested process to make it all work for you in regards to the sys.dm_db_index_usage_stats that record information on which indexes are (and just as importantly are not being utilized.) Part Two will focus on a similar process for the sys.dm_index_operational_stats DMF. Part Three will wrap up this series with persisting a collected view from the four Missing Index DMOs.

Solution

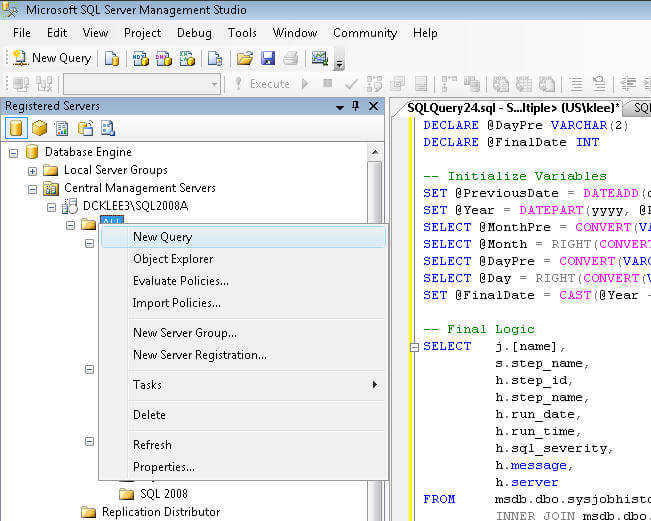

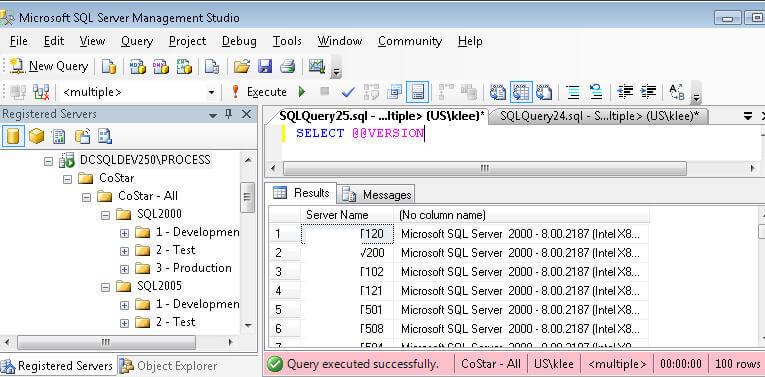

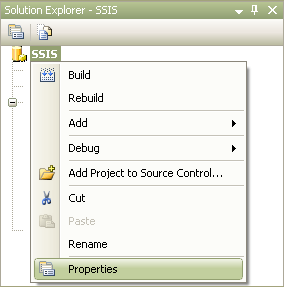

As I've stated before in many of the tips I've written here at MSSQLTips.com, I have a dedicated database on each of my SQL Server instances specifically for administrative objects I use in my daily tasks and automated administrative processes. It is here that I have created the necessary objects for the metadata persisting processes I'm outlining in this series. I'm providing the code below to create the database and all necessary objects we will be discussing, if you have your own dedicated administrative database please feel free to alter the code accordingly. As always, any code you see presented here should be thoroughly tested in a non-production environment before applying to a live production instance of SQL Server.

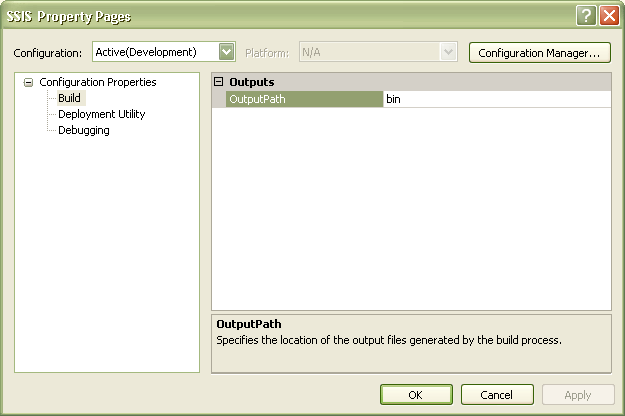

Step One: Create the Administrative Database and All Necessary Objects

I separate my database objects within my administrative database by schema. You'll see below that after creation of the database, I create a MetaBot schema that will serve as the schema for all objects of this process type.

USE [master] |

From here we only require two user-created database objects: the repository table that will persist the data (MetaBot.dm_db_index_usage_stats) and the stored procedure used to collect it. Let's first look at the structure of the table and I'll make my case as to why it's slightly more-complex than you would expect and why it's not normalized.

CREATE TABLE [MetaBOT].[dm_db_index_usage_stats]( |

The table is (for the most part) a duplicate in structure of the sys.dm_db_index_usage_stats Dynamic Management View with a few notable exceptions:

- collection of columns prefixed with "last_poll_"

- date_stamp column

The last_poll_... columns are vital for the calculations that go into the incrementing and updating of usage counts each time the stored procedure will run against sys.dm_db_index_usage_stats. If your instance never is subject to a service restart these columns would not be necessary, however we all know that is not the case. Even the best-constructed environments must still have routine maintenance that will require bringing the system down. The date_stamp column will record when the last refresh of MetaBot.dm_db_index_usage_stats occurred. It also serves to determine how the usage values are incremented in that it is compared to the last time the services were cycled. Depending on whether that occurred pre or post the last refresh occurred, a different calculation is used to update the related columns. We will go over those possible calculations after we look at the stored procedure code. The table I'll present should make this much clearer to you.

Speaking of the stored procedure code, I'm presenting it below.

USE iDBA |

The code is comprised of a few different logical sections:

- Variable declarations and value assignments

- Update of existing values with new usage counts

- Insertion of new rows associated with indexes that have, up to this point, not been used in any read or write processes.

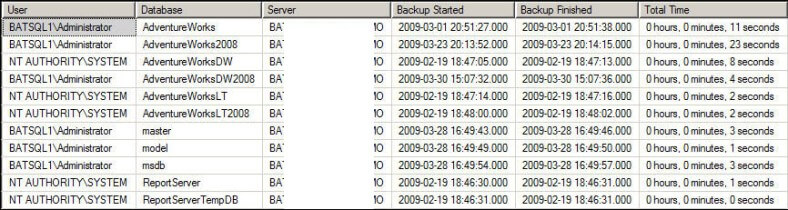

The two variables in the stored procedure determine which UPDATE script runs in the confines of the stored procedure. This is necessary, because the correct calculation for incrementing the index usage columns depends upon either the last_poll column pertaining to the specific user or system index action (seek, scan, update, or lookup) or the last persisted value for the corresponding usage column. The following table illustrates this issue much better than I can do in this limited space. M is the alias for MetaBot.dm_db_index_usage_stats, S is the alias for the sys.dm_db_index_usage_stats DMV.

| M.user_scans(existing) | M.last_poll (existing) | S.user_scans | M.user_scans (new) | Calculation | M.last_poll (new) | Calculation | |

| Initial Population (INSERT) | 0 | 0 | 10 | 10 | = S.col | 10 | = S.col |

| restart_date <> | 10 | 10 | 15 | 15 | = M.col + (S.col - M.last_poll _col) | 15 | = S.col |

| SQL Server service restart occurs, all cached values lost, increments reset to 0 | |||||||

| restart_date > last_date_stamp value | 15 | 15 | 5 | 20 | = M.col + S.col | 5 | = S.col |

| restart_date <> | 15 | 5 | 8 | 18 | = M.col + (S.col - M.last_poll _col) | 8 | = S.col |

When a unique record is first encountered it is inserted into the persisted table. A unique record is based upon the combination key of database_id, object_id, and index_id in the case of the sys.dm_db_index_usage_stats DMV. If this combination of identifiers already exists in the persisted table, the stored procedure relies upon the underlying determination of whether or not a system restart has been encountered since the last time the metadata was persisted. If it has not, then the usage columns are incremented by adding the existing value of that field to the difference between the new value from the DMV and the last value that was read from the DMV when the last run of the stored procedure executed. If a restart did occur, then the counters are all reset to 0. This means that the formula previously outlined would result in incorrect numbers being persisted. Instead you must take the sum of the existing column value and the corresponding value from the DMV as the new value to be persisted. In all cases the calculation for the last_poll column value will remain unchanged as will the code associated with the INSERT of new index usage rows.

You may notice that we do risk the loss of counter data. The only way to ensure that all usage data is persisted would be if this stored procedure would run prior to any service restart - not a viable option. However, unlike user data, we are using this metadata for metrics in determining whether an index is of any value - is it used significantly to offset the overhead of writes against it. So long as we record significant data over the lifetime of the instance we have no need to capture all usage values within reason.

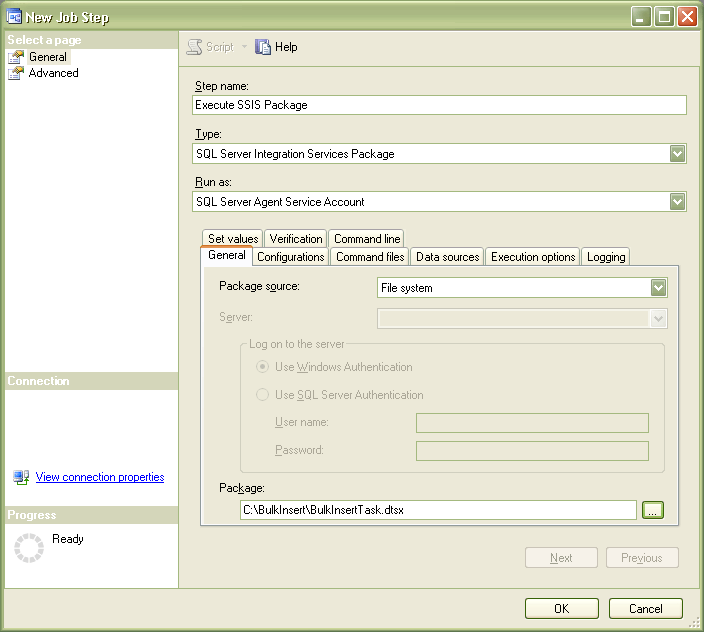

The final step would be to create a SQL Server Agent Job for the execution of this stored procedure. I'll leave that up to you. In our environment I run this process six times daily. It's very lightweight and I've not found it to interfere with normal user operations or backup cycles. I do not run it during the timeframe of scheduled index maintenance, but that is by choice on my part. I encourage you to test with schedules that fit your individual needs.

In the next tip of the series we will create a similar process for the sys.dm_db_index_operational_stats DMF, which needs to be handled in a slightly different manner as it is a function with the narrower scope of a database versus the instance scope of sys.dm_db_index_usage_stats.

Thank you: MS SQL TRIPS